
This project is maintained by Christopher Teo

Fair Generative Model via Transfer Learning (AAAI23)

| Christopher T. H. Teo | Milad Abdollahzadeh | Ngai-man Cheung |
|---|---|---|

Abstract

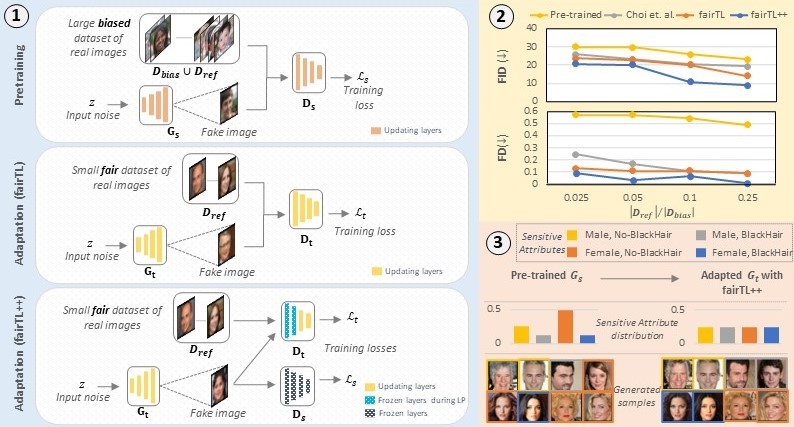

This work addresses fair generative models. Dataset biases have been a major cause of unfairness in deep generative models. Previous work has proposed augmenting large, biased datasets with small, unbiased reference datasets. Under this setup, a weakly-supervised approach has been proposed that achieves state-of-the-art quality and fairness in generated samples. In our work, based on this setup, we propose a simple yet effective approach. Specifically, we first propose fairTL, a transfer learning approach to learn fair generative models. Under fairTL, we pre-train the generative model with the available large, biased datasets and subsequently adapt the model using the small, unbiased reference dataset. fairTL can learn expressive sample generation during pre-training, thanks to the large (biased) dataset. This knowledge is then transferred to the target model during adaptation, which also learns to capture the underlying fair distribution of the small reference dataset. Second, we propose fairTL++, which introduces two additional innovations to improve upon fairTL: (i) multiple feedback and (ii) Linear-Probing followed by Fine-Tuning (LP-FT). Taking one step further, we consider an alternative, challenging setup in which only a pre-trained (potentially biased) model is available but the dataset used to pre-train it is inaccessible. We demonstrate that fairTL and fairTL++ remain very effective under this setup. We note that previous work requires access to the large, biased datasets and cannot handle this more challenging setup. Extensive experiments show that fairTL and fairTL++ achieve state-of-the-art results in both quality and fairness of generated samples.
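The training schedule described above (pre-train on the large, biased data, then adapt on the small, fair reference set, with LP-FT splitting adaptation into a linear-probing stage and a fine-tuning stage) can be illustrated with a toy model. This is a minimal sketch under loud assumptions, not the authors' implementation: the "generator" is a hypothetical categorical model p(x, a) = p(a) p(x | a) fitted by maximum likelihood instead of a GAN; `attr_logits` stands in for whatever parameters are linear-probed, and `content_logits` for the expressive part learned during pre-training. All names, counts, and learning rates are invented for illustration, and the multiple-feedback component of fairTL++ is not modeled.

```python
import math


def softmax(logits):
    # Numerically stable softmax over a list of floats.
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    s = sum(exps)
    return [e / s for e in exps]


class ToyGenerator:
    """Hypothetical stand-in for a generator: p(x, a) = p(a) * p(x | a).

    attr_logits: small "head" controlling the sensitive attribute a.
    content_logits: per-attribute content distribution p(x | a).
    """

    def __init__(self, num_attrs=2, num_contents=3):
        self.attr_logits = [0.0] * num_attrs
        self.content_logits = [[0.0] * num_contents for _ in range(num_attrs)]

    def attr_probs(self):
        return softmax(self.attr_logits)

    def content_probs(self, a):
        return softmax(self.content_logits[a])


def fit(model, counts, steps, lr, train_content=True):
    """Gradient descent on the mean negative log-likelihood of `counts`.

    counts[a][x] is the number of samples with attribute a and content x.
    With train_content=False only attr_logits move (the linear-probing stage).
    """
    total = sum(sum(row) for row in counts)
    for _ in range(steps):
        # dNLL/d attr_logits = p(a) - empirical frequency of a.
        p_a = model.attr_probs()
        attr_grad = [p_a[a] - sum(counts[a]) / total for a in range(len(counts))]
        if train_content:
            for a, row in enumerate(counts):
                n_a = sum(row)
                if n_a == 0:
                    continue
                p_x = model.content_probs(a)
                # Each sample with attribute a contributes log p(x | a),
                # so this group's gradient is weighted by n_a / total.
                for x in range(len(row)):
                    grad = (n_a / total) * (p_x[x] - row[x] / n_a)
                    model.content_logits[a][x] -= lr * grad
        for a, g in enumerate(attr_grad):
            model.attr_logits[a] -= lr * g


# Large, biased dataset: 90% of the 10,000 samples carry attribute 0.
biased = [[4500, 2700, 1800], [500, 300, 200]]
# Small, balanced reference dataset: 10 samples, 5 per attribute.
reference = [[3, 1, 1], [2, 2, 1]]

model = ToyGenerator()
fit(model, biased, steps=2000, lr=1.0)                         # pre-training
p_pre = model.attr_probs()
fit(model, reference, steps=200, lr=1.0, train_content=False)  # linear probing
fit(model, reference, steps=20, lr=0.05)                       # fine-tuning
p_post = model.attr_probs()
print([round(p, 3) for p in p_pre], [round(p, 3) for p in p_post])
# → [0.9, 0.1] [0.5, 0.5]
```

In this toy, the attribute distribution becomes balanced after adaptation, while p(x | a) stays close to what was learned from the large dataset. Fitting only the 10 reference samples from scratch would instead copy their noisy content frequencies, which loosely mirrors the motivation for transferring knowledge from pre-training rather than training on the reference set alone.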